Logistic Regression

Logistic Regression is analogous to multiple linear regression, except that the outcome is binary. Despite its name, logistic regression is a classification method, and it often performs well on tasks such as text classification. It works by modeling the log-odds of the positive outcome as a linear function of the inputs and mapping that back to a probability with the logistic function, hence the name. It is popular because it trains quickly and produces a model that can score new data rapidly.

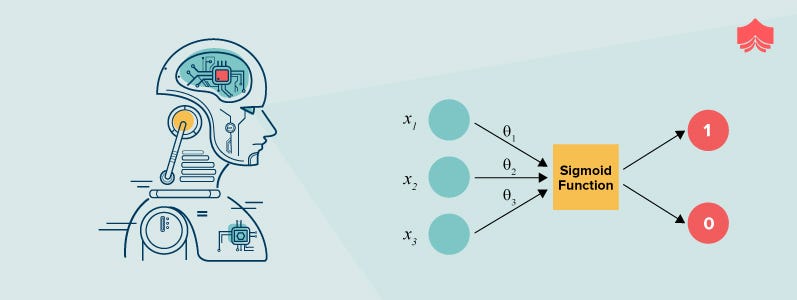

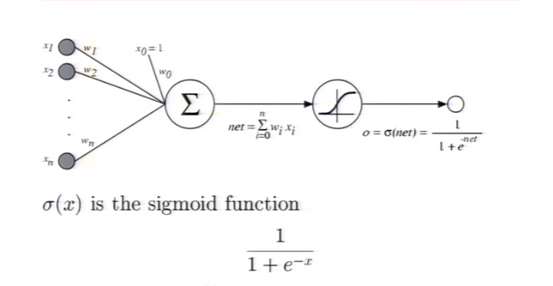

This raises a question: how do we get from a binary outcome variable to one that can be modeled in a linear fashion, and then back again to a binary outcome? Just like a Linear Regression model, a Logistic Regression model computes a weighted sum of the input features (plus a bias term). But instead of outputting this result directly, as linear regression does, it outputs the logistic of the result.

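In other words, the model first computes the weighted sum of the input features plus the intercept, exactly as linear regression does. It then passes the result through the logistic (sigmoid) function $\sigma(t) = \frac{1}{1 + e^{-t}}$, which squashes it into the range between 0 and 1. The estimated probability is therefore $p = \sigma(\theta^T x)$, where $x$ includes a constant feature $x_0 = 1$ for the bias term.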
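A minimal sketch of this two-step computation in plain Python, using only the `math` module. The parameter layout (`theta[0]` as the bias, the remaining entries as feature weights), the helper names, and the example numbers are assumptions for illustration:

```python
import math


def sigmoid(t):
    """Logistic (sigmoid) function: maps any real number into the range (0, 1)."""
    # Branch on the sign of t so math.exp never overflows for large |t|.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def predict_proba(theta, x):
    """Estimated probability p that y = 1 for a single instance x.

    theta[0] is the bias (intercept); theta[1:] are the feature weights.
    """
    # Step 1: weighted sum of the features plus the bias, as in linear regression.
    t = theta[0] + sum(w * xj for w, xj in zip(theta[1:], x))
    # Step 2: squash the result into (0, 1) with the logistic function.
    return sigmoid(t)


# Hypothetical parameters and instance, for illustration only.
theta = [-1.0, 0.5, 2.0]
x = [1.0, 0.5]
print(predict_proba(theta, x))  # weighted sum is 0.5, so p ≈ 0.6225
```

The sign branch in `sigmoid` is just a numerical-stability choice; mathematically both branches compute the same function.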

The output $p$ lies between 0 and 1, and the predicted class is

$$\hat{y} = \begin{cases} 0 & \text{if } p < 0.5 \\ 1 & \text{if } p \ge 0.5 \end{cases}$$
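The decision rule can be sketched the same way. Because $\sigma(t) \ge 0.5$ exactly when $t \ge 0$, comparing $p$ with 0.5 is equivalent to checking the sign of the weighted sum $\theta^T x$. The `threshold` parameter and the example values are hypothetical additions to show where the 0.5 cut-off lives:

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def predict(theta, x, threshold=0.5):
    """Predicted class (0 or 1) for one instance.

    theta[0] is the bias; `threshold` is a hypothetical knob, and 0.5 matches the rule above.
    """
    t = theta[0] + sum(w * xj for w, xj in zip(theta[1:], x))
    p = sigmoid(t)
    # p >= 0.5 exactly when t >= 0, so the decision boundary is theta^T x = 0.
    return 1 if p >= threshold else 0


theta = [-1.0, 0.5, 2.0]           # hypothetical parameters
print(predict(theta, [1.0, 0.5]))  # t = 0.5, p ≈ 0.62 -> 1
print(predict(theta, [0.0, 0.0]))  # t = -1.0, p ≈ 0.27 -> 0
```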

Training and Cost Function

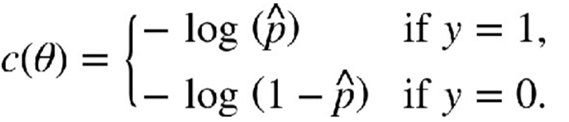

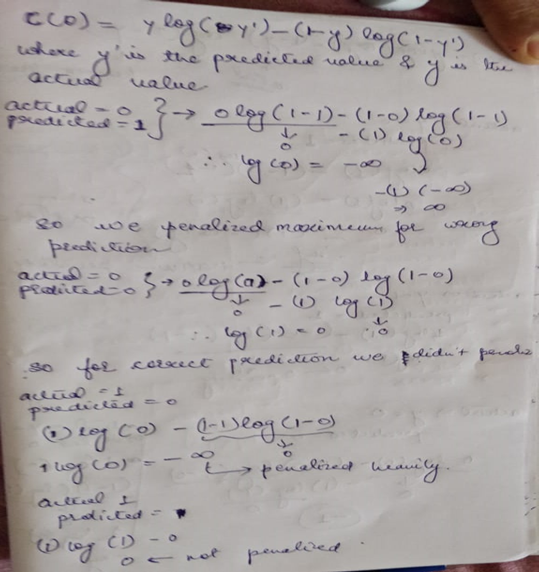

Now we know how a Logistic Regression model estimates probabilities and makes predictions. But how is it trained? The objective of training is to find parameters θ for which the model estimates high probabilities for positive instances (y = 1) and low probabilities for negative instances (y = 0).

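The equation above is the cost function for a single training instance: $c(\theta) = -\log(p)$ if $y = 1$, and $c(\theta) = -\log(1 - p)$ if $y = 0$. It makes sense because $-\log(t)$ grows very large as $t$ approaches 0: the cost is large when the model estimates a probability close to 0 for a positive instance (or close to 1 for a negative instance), and close to 0 when the estimate is confidently correct. Wrong predictions are therefore penalized heavily.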
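A small sketch of the per-instance cost, assuming the standard log-loss form given above ($-\log p$ for a positive instance, $-\log(1 - p)$ for a negative one); `instance_cost` is a hypothetical helper name:

```python
import math


def instance_cost(p, y):
    """Cost of one training instance under the log-loss form.

    p: estimated probability that y = 1, strictly between 0 and 1.
    y: true label, 0 or 1.
    """
    if y == 1:
        return -math.log(p)        # grows without bound as p approaches 0
    return -math.log(1.0 - p)      # grows without bound as p approaches 1


# A positive instance (y = 1) scored with increasingly wrong estimates.
for p in (0.99, 0.5, 0.01):
    print(f"p = {p}: cost = {instance_cost(p, 1):.3f}")
```

For a positive instance, the cost climbs from about 0.01 at p = 0.99 to about 4.6 at p = 0.01, which is the heavy penalty for confident mistakes.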

The cost function over the whole training set is simply the average cost over all training instances.

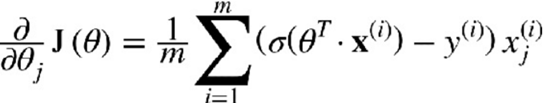
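Averaging the per-instance cost over $m$ instances gives the familiar log loss, $J(\theta) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log p^{(i)} + (1 - y^{(i)}) \log(1 - p^{(i)}) \right]$. The sketch below computes it in plain Python. The clipping constant `eps` is an assumption added only to avoid `log(0)` when the sigmoid saturates in floating point, and the toy data are hypothetical:

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def log_loss(theta, X, y, eps=1e-12):
    """Average cost J(theta) over the training set.

    X: list of feature vectors (bias not included); y: list of 0/1 labels.
    eps: assumed clipping constant that keeps log() away from 0.
    """
    total = 0.0
    for x, label in zip(X, y):
        p = sigmoid(theta[0] + sum(w * xj for w, xj in zip(theta[1:], x)))
        p = min(max(p, eps), 1.0 - eps)  # numerical safeguard only
        # Per-instance cost: -log(p) if label is 1, -log(1 - p) if label is 0.
        total += -(label * math.log(p) + (1 - label) * math.log(1.0 - p))
    return total / len(X)


X = [[-2.0], [-1.0], [0.5], [1.0], [3.0]]  # hypothetical toy data
y = [0, 1, 0, 1, 1]
print(log_loss([0.0, 0.0], X, y))  # every p is 0.5, so J = log 2 ≈ 0.693
```

With all parameters at zero every estimate is 0.5, so the cost is log 2 regardless of the labels, which makes a handy sanity check.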

Our goal is now to find the θ that minimizes this cost function. The bad news is that there is no known closed-form equation to compute it. The good news is that this cost function is convex, so Gradient Descent (or any other suitable optimization algorithm) is guaranteed to find the global minimum, provided the learning rate is not too large and it runs long enough.
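Convexity can be spot-checked numerically. The sketch below restricts the model to a single weight `w` with no bias (a simplification for illustration), then tests the midpoint inequality from the glossary on a grid of weight pairs. A grid check is evidence, not a proof. The toy data are hypothetical and deliberately not linearly separable: with perfectly separable data the cost keeps decreasing as the weights grow, so there is no finite minimum to find.

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def cost_1d(w, X, y):
    """Average log-loss for a simplified model with one weight w and no bias."""
    total = 0.0
    for x, label in zip(X, y):
        p = sigmoid(w * x)
        total += -(label * math.log(p) + (1 - label) * math.log(1.0 - p))
    return total / len(X)


# Hypothetical toy data; the labels are not linearly separable.
X = [-2.0, -1.0, 0.5, 1.0, 3.0]
y = [0, 1, 0, 1, 1]

grid = [i / 2 for i in range(-10, 11)]  # candidate weights from -5.0 to 5.0
# Midpoint inequality: f((a + b) / 2) <= (f(a) + f(b)) / 2, with a tiny float tolerance.
midpoint_convex = all(
    cost_1d((a + b) / 2, X, y) <= (cost_1d(a, X, y) + cost_1d(b, X, y)) / 2 + 1e-12
    for a in grid
    for b in grid
)
print(midpoint_convex)  # True on this grid
```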

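The partial derivative of the cost function $J(\theta)$ with respect to the $j$th model parameter $\theta_j$ is

$$\frac{\partial}{\partial \theta_j} J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \left( \sigma(\theta^T x^{(i)}) - y^{(i)} \right) x_j^{(i)}$$

For each instance, it computes the prediction error and multiplies it by the $j$th feature value, then averages over all $m$ training instances. Once you have the gradient vector containing all the partial derivatives, you can use it in the Batch Gradient Descent algorithm. That's it: you now know how to train a Logistic Regression model.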
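A minimal Batch Gradient Descent sketch that follows the description above: the prediction error for each instance is multiplied by each feature value and averaged over the training set. The learning rate `eta`, the iteration count, the helper names, and the toy data are illustrative assumptions, not tuned values. Running it from two different starting points should land on essentially the same parameters, as convexity predicts:

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    z = math.exp(t)
    return z / (1.0 + z)


def gradient(theta, X, y):
    """Partial derivatives of J(theta): (1/m) * sum((sigmoid(theta^T x) - y) * x_j)."""
    m = len(X)
    grad = [0.0] * len(theta)
    for x, label in zip(X, y):
        xb = [1.0] + list(x)  # prepend x_0 = 1 so theta[0] acts as the bias
        error = sigmoid(sum(t * xj for t, xj in zip(theta, xb))) - label  # prediction error
        for j, xj in enumerate(xb):
            grad[j] += error * xj  # error times the jth feature value
    return [g / m for g in grad]  # average over all training instances


def batch_gradient_descent(X, y, eta=0.1, n_iterations=5000, theta=None):
    """Batch Gradient Descent; eta and n_iterations are illustrative, untuned defaults."""
    if theta is None:
        theta = [0.0] * (len(X[0]) + 1)
    for _ in range(n_iterations):
        grad = gradient(theta, X, y)  # uses the full training set at every step
        theta = [t - eta * g for t, g in zip(theta, grad)]
    return theta


# Hypothetical, non-separable toy data: one feature per instance.
X = [[-2.0], [-1.0], [0.5], [1.0], [3.0]]
y = [0, 1, 0, 1, 1]

theta_a = batch_gradient_descent(X, y)                     # start from zeros
theta_b = batch_gradient_descent(X, y, theta=[5.0, -5.0])  # start far away
print(theta_a)
print(theta_b)  # should agree with theta_a to many decimal places
```

Using the whole training set for every step is what makes this the "batch" variant; stochastic or mini-batch versions would compute the same gradient expression on a subset of instances.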

Glossary Alert

· Binary: Involving a choice between or condition of two alternatives only.

· Closed-Form Equation: A closed-form solution expresses the answer to a problem using a finite number of functions and operations from a generally accepted set (for example, arithmetic operations, roots, exponentials, and logarithms).

· Convex Function: Consider a function y = f(x) that is continuous on the interval [a, b]. The function is called convex (also convex downward, or concave upward) if, for any two points x1 and x2 in [a, b], the following inequality holds:

$$f\left(\frac{x_1 + x_2}{2}\right) \le \frac{f(x_1) + f(x_2)}{2}$$

· Cost Function: A function that measures how well a machine learning model performs on given data. It quantifies the error between predicted values and expected values and expresses it as a single real number.

You can find my Logistic Regression implementation on a real-world dataset on GitHub: https://github.com/akshayakn13/Logistic-Regression

Check out my other articles on machine learning:

Linear Regression from scratch.

Web-Scraping with Beautiful Soup

How I started my journey as Machine Learning enthusiast

External Resources to learn more about Logistic Regression

Hands-On Machine Learning with Scikit-Learn and TensorFlow, Aurélien Géron

Practical Statistics for Data Scientists, Peter Bruce and Andrew Bruce

Building Machine Learning Systems with Python, Willi Richert and Luis Pedro Coelho